Unknown Delay#

In this section, we are concerned with a signal whose precise time of arrival at the receiver, i.e., the transmission delay, is unknown.

This type of problem arises naturally in radar systems, and it also occurs in communications systems in which data or synchronization probes are transmitted in erratic bursts.

The unknown time of arrival is denoted by the continuous random variable \( t_0 \), which is quantized to \( t_l \) when we work with samples.

Under hypothesis \( H_i \), the continuous-time received signal is

Since the time of arrival is unknown, it is generally necessary to observe \( y_i(t) \) over a period longer than the interval \( (t_0, t_0 + T) \) on which the signal is defined.

Nonetheless, we can still use this signal expression as a model for times preceding \( t_0 \) and for times after \( T + t_0 \) by using appropriate amplitude and phase functions \( a_i(t) \) and \( \phi_i(t) \).
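As a minimal sketch of this modeling step, the following snippet builds a received signal on an observation grid that extends beyond the signal's support by setting the amplitude function to zero outside \( (t_0, t_0 + T) \). The function name `received_signal`, the constant-amplitude sinusoidal carrier, and the carrier frequency `fc` are illustrative assumptions, not the signal model used in the text.

```python
import numpy as np

def received_signal(t, t0, T, amplitude=1.0, fc=2.0, phase=0.0):
    """Hypothetical burst: a sinusoid that is nonzero only on [t0, t0 + T]."""
    t = np.asarray(t, dtype=float)
    # Amplitude function a(t): constant inside the burst, zero elsewhere
    a = np.where((t >= t0) & (t <= t0 + T), amplitude, 0.0)
    return a * np.cos(2 * np.pi * fc * (t - t0) + phase)

# Observe over an interval longer than the burst itself
t = np.linspace(-1.0, 15.0, 1601)
y = received_signal(t, t0=2.5, T=8.0)
```

The same expression is used at every time instant; only the amplitude function changes, which is the point of extending the model beyond the burst.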

```python
import numpy as np
import matplotlib.pyplot as plt

# Define the time points
t_A = 0  # Earliest arrival time
t_B = 6  # Latest arrival time
T = 8    # Duration of the signal y_i(t)
t_0 = np.random.uniform(t_A, t_B)  # Arrival time drawn uniformly from (t_A, t_B)

# Create the figure and axis
fig, ax = plt.subplots(figsize=(12, 6))

# Draw the timeline
ax.hlines(0, t_A - 1, t_B + T + 1, color="black", linewidth=1)
ax.scatter([t_A, t_0, t_B, t_0 + T], [0, 0, 0, 0], color="black")

# Label the points
ax.text(t_A, 0.1, "$t_A$", fontsize=12, ha='center')
ax.text(t_0, 0.1, f"$t_0 = {t_0:.2f}$", fontsize=12, ha='center', color="blue")
ax.text(t_B, 0.1, "$t_B$", fontsize=12, ha='center')
ax.text(t_0 + T, 0.1, "$t_0 + T$", fontsize=12, ha='center', color="red")

# Mark the support of the density p(t_0) with an arrow
ax.arrow(t_A, -0.2, t_B - t_A, 0, head_width=0.05, head_length=0.2, fc='blue', ec='blue')
ax.text((t_A + t_B) / 2, -0.4, "$p(t_0)$ (Uniform)", fontsize=12, ha='center', color="blue")

# Mark the duration of y_i(t) with an arrow
ax.arrow(t_0, 0.2, T, 0, head_width=0.05, head_length=0.2, fc='red', ec='red')
ax.text(t_0 + T / 2, 0.4, "duration of $y_i(t)$", fontsize=12, ha='center', color="red")

# Format the plot
ax.set_ylim(-1, 1)
ax.set_xlim(t_A - 1, t_B + T + 1)
ax.axis('off')

# Add the title and show the plot
plt.title("Conceptual Description of Variable Time of Arrival with Uniform $t_0$", fontsize=14)
plt.show()
```

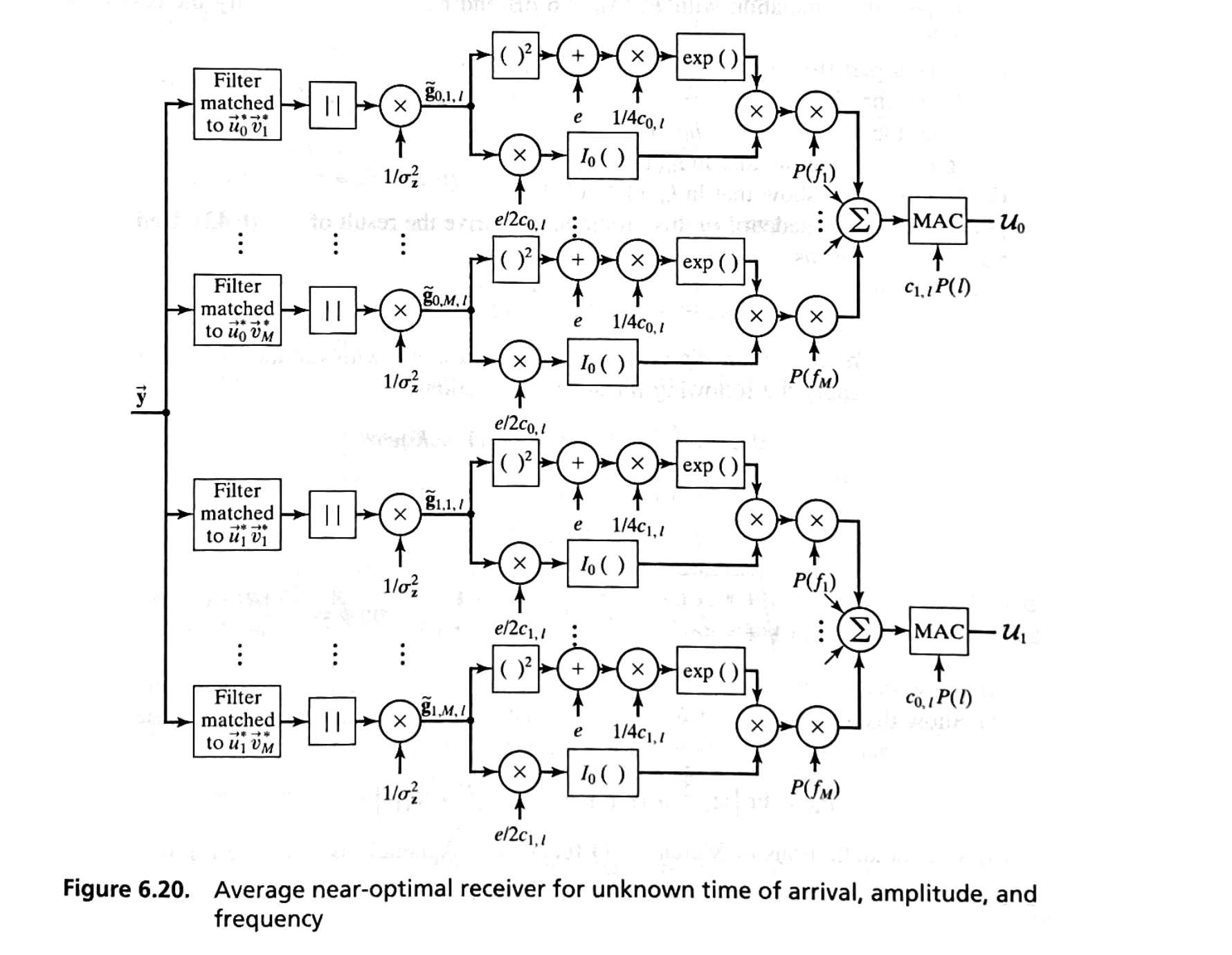

The figure above illustrates the problem conceptually.

\( y_i(t) \) extends over the time interval \( (t_0, t_0 + T) \).

However, \( t_0 \) is itself unknown and is characterized by its probability density function \( p(t_0) \), which in the figure is shown to extend over a finite interval \( (t_A, t_B) \).

There are no theoretical limitations on \( t_A \) or \( t_B \), but practical considerations can often be used to estimate the earliest and latest times at which the signal could arrive (\( t_A \) and \( t_B \), respectively).

```python
import numpy as np
import matplotlib.pyplot as plt

# Plot to illustrate the multipath fading channel phenomenon

# Parameters
t = np.linspace(0, 10, 1000)  # Time vector
paths = [1, 0.8, 0.5, 0.3]    # Relative amplitudes of the paths
delays = [0, 1, 3, 5]         # Delay of each path (s)
colors = ['blue', 'green', 'orange', 'cyan']

# Composite signal (sum of multipath components)
composite_signal = np.zeros_like(t)

# Plot the individual paths and accumulate the composite signal
plt.figure(figsize=(12, 6))
for i, (amp, delay, color) in enumerate(zip(paths, delays, colors)):
    # Each path is a 0.5 Hz sinusoid that switches on at its own delay
    path_signal = amp * np.sin(2 * np.pi * 0.5 * (t - delay)) * (t >= delay)
    composite_signal += path_signal
    plt.plot(t, path_signal, label=f"Path {i+1} (Delay: {delay}s, Amp: {amp})", linestyle="--", color=color)

# Plot the composite signal
plt.plot(t, composite_signal, label="Superimposed Signal", color="red", linewidth=3)

# Labels and legend
plt.title("Illustration of Multipath Fading Channel", fontsize=14)
plt.xlabel("Time (s)", fontsize=12)
plt.ylabel("Amplitude", fontsize=12)
plt.axhline(0, color="black", linewidth=0.5, linestyle="--")
plt.legend()
plt.grid()
plt.show()
```

Noncoherent Receiver#

In developing receiver structures, we first assume that the attenuation \( \alpha \) and frequency shift \( f_d \) are known.

Under these conditions, the frequency shift \( f_d \) can be set equal to zero with no loss of generality.

The phase \( \beta \), on the other hand, is assumed to be uniformly distributed on the interval \( (0, 2\pi) \).

As a result of these assumptions, noncoherent receiver structures are obtained.
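The uniform-phase assumption is what brings the modified Bessel function \( I_0(\cdot) \) into noncoherent receivers: averaging \( e^{x \cos(\beta - \theta)} \) over a phase \( \beta \) uniform on \( (0, 2\pi) \) gives \( I_0(x) \) for any fixed \( \theta \). The sketch below checks this identity numerically; the function name `phase_average` and the test values are illustrative choices.

```python
import numpy as np

def phase_average(x, theta=0.0, n=4096):
    """Average exp(x * cos(beta - theta)) over beta uniform on (0, 2*pi)."""
    # A uniform grid over one full period approximates the phase average
    beta = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    return float(np.mean(np.exp(x * np.cos(beta - theta))))

x = 3.0
averaged = phase_average(x, theta=0.7)
reference = float(np.i0(x))  # modified Bessel function of the first kind, order zero
```

The result does not depend on `theta`, which is why a receiver built on this average needs only the magnitude of the matched-filter output.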

Unknown Time of Arrival, Known Amplitude and Frequency#

Using an approach similar to that developed in the unknown-phase section, we take \( k \) samples over the interval \( T \).

However, to account for the unknown time of arrival, we quantize \( t_0 \) so that the arrival occurs at one of the sample times \( t_l \).

The \( j \)-th sample under hypothesis \( H_i \) is

which can be written in vector notation as

where

Averaging over the random phase \( \beta_i \) as in preceding sections, we can write the conditional density function of \( \vec{y} \) given \( t_l \) as

Since we are dealing with sampled systems, we quantize the times \( t_A \) and \( t_B \) and associate the sample numbers \( l_A \) and \( l_B \) with them.

Correspondingly, we denote by \( P(l) \) the probability that the signal arrives at sample \( l \), for \( l_A \le l \le l_B \).

It follows that we can approximate the unconditional density function of \( \vec{y} \) as

In general, at this point we would calculate the likelihood ratio \( L(\vec{y}) = \frac{p_1(\vec{y})}{p_0(\vec{y})} \) and determine receiver structures from it.

Unfortunately, this approach does not lead to practical structures.
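Returning to the quantized arrival-time probabilities, the sketch below shows one way to turn a density \( p(t_0) \) on \( (t_A, t_B) \) into sample indices \( l_A, \ldots, l_B \) and probabilities \( P(l) \). The helper name `quantize_arrival_pdf`, the sample spacing `dt`, the rule \( P(l) \propto p(t_l)\,\Delta t \) followed by renormalization, and the uniform density are assumptions made for illustration.

```python
import numpy as np

def quantize_arrival_pdf(pdf, t_A, t_B, dt):
    """Return sample indices l_A..l_B and probabilities P(l) ~ pdf(t_l) * dt."""
    l_A = int(round(t_A / dt))
    l_B = int(round(t_B / dt))
    l = np.arange(l_A, l_B + 1)
    P = pdf(l * dt) * dt
    return l, P / P.sum()  # renormalize so that the P(l) sum to one

t_A, t_B, dt = 0.0, 6.0, 0.1
uniform_pdf = lambda t: np.full_like(t, 1.0 / (t_B - t_A))
l, P = quantize_arrival_pdf(uniform_pdf, t_A, t_B, dt)
```

For a uniform density every trial arrival index receives the same weight, so the weighting by \( P(l) \) reduces to a plain average.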

If, on the other hand, we return to \(p_i(\vec{y} | t_l)\), we can define a conditional likelihood function as

or, better yet, a conditional log-likelihood function as

from which an average near-optimal receiver follows by averaging over the arrival time, according to the equation

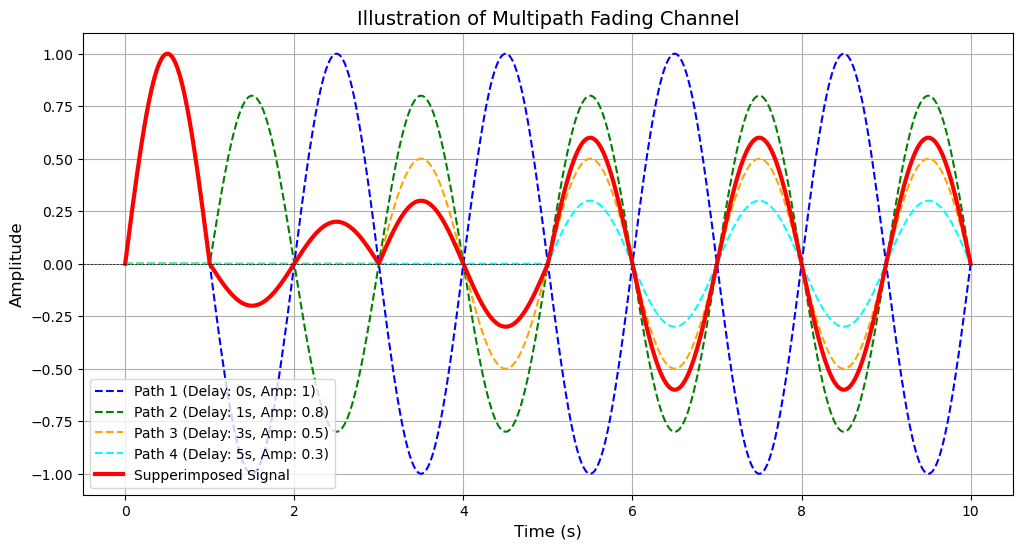

Figure 6.17 shows such a receiver.

The sampled inputs \( y_j \) are processed by two digital matched filters, each producing sampled outputs represented as \( \{ X_{i,l} \} \), \( i = 0, 1 \), where

\[ X_{i,l} = \sum_{j=l}^{l+k} y_j u_{ij}^*, \quad i = 0, 1 \]

The magnitude of each sample is determined, scaled by the factor \( \alpha / \sigma_z^2 \), and then processed by a \( \ln I_0(\cdot) \) nonlinearity.

Then, a predetermined bias is removed from each sample.

The bias is represented by

\[ Y_{i,l} = \frac{\alpha^2}{2 \sigma_z^2} \sum_{j=l}^{l+k} |u_{ij}|^2, \quad i = 0, 1 \]

Finally, all the samples are weighted by \( P(l) \) and accumulated, producing the decision variables \( U_1 \) and \( U_0 \).

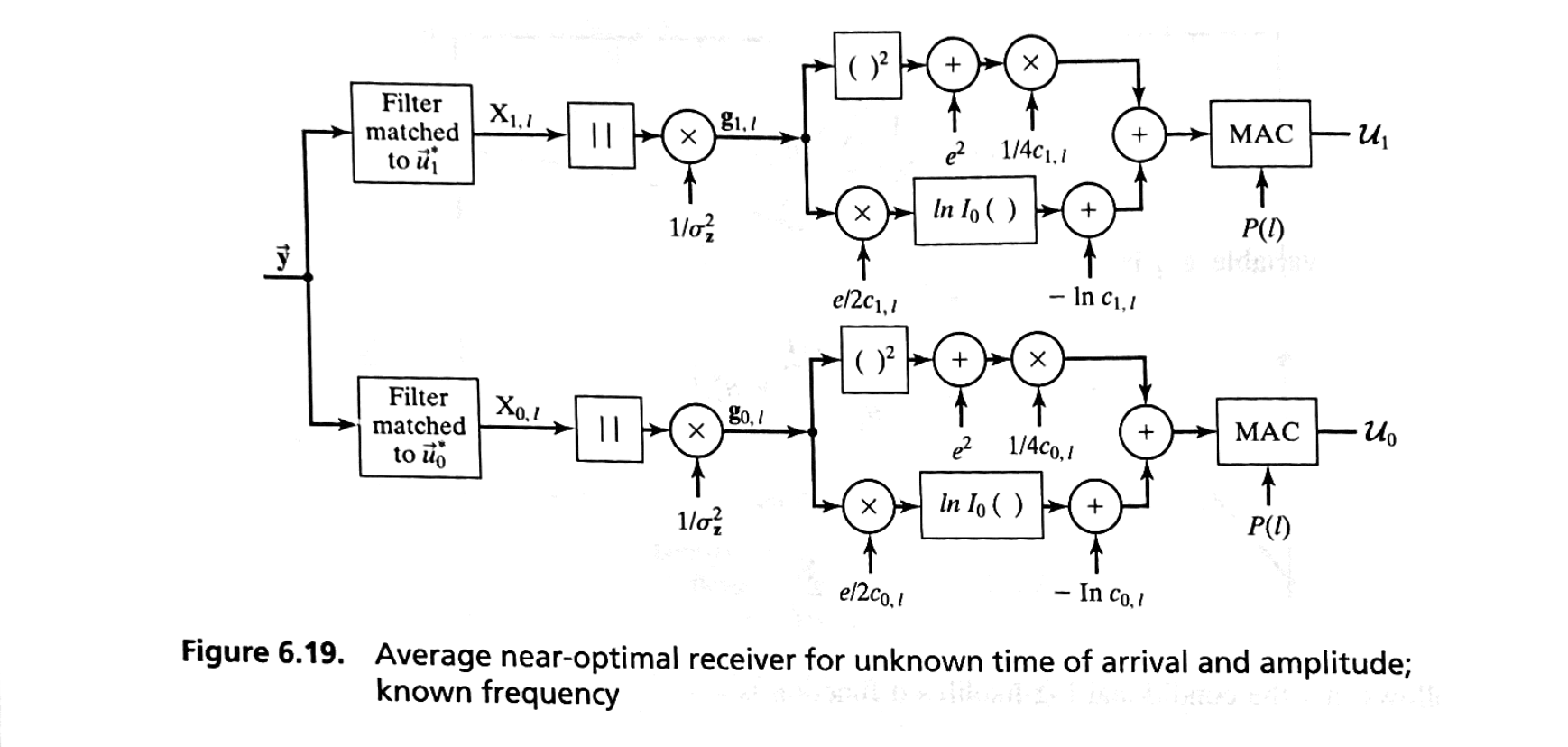
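The processing chain described above (matched filter, magnitude, scaling, \( \ln I_0(\cdot) \), bias removal, weighting by \( P(l) \), accumulation) can be sketched as follows. This is a minimal illustration under stated assumptions, not a definitive implementation: the function name `decision_variable`, the use of \( k \) reference samples aligned to each trial arrival index \( l \), the complex-exponential references, the complex noise with total variance \( \sigma_z^2 \) per sample, and all numerical values are hypothetical. `np.log(np.i0(x))` is adequate only for moderate arguments, since `np.i0` overflows for very large `x`.

```python
import numpy as np

def decision_variable(y, u, P, l_A, alpha, sigma2):
    """Accumulate P(l) * [ln I0(alpha/sigma2 * |X_l|) - Y_l] over trial arrivals."""
    k = len(u)
    # Bias term Y_l; identical for every l because the same reference is reused
    bias = alpha**2 / (2.0 * sigma2) * np.sum(np.abs(u) ** 2)
    U = 0.0
    for idx, P_l in enumerate(P):
        l = l_A + idx
        X = np.sum(y[l:l + k] * np.conj(u))  # matched-filter output at trial index l
        U += P_l * (np.log(np.i0(alpha / sigma2 * np.abs(X))) - bias)
    return float(U)

# Illustrative scenario: the H1 signal arrives at sample l_true with unknown phase
rng = np.random.default_rng(0)
k, l_A, l_B, l_true = 32, 0, 40, 20
alpha, sigma2 = 1.0, 1.0
n = np.arange(k)
u0 = np.exp(2j * np.pi * 4 * n / k)  # hypothetical reference under H0
u1 = np.exp(2j * np.pi * 8 * n / k)  # hypothetical reference under H1
P = np.full(l_B - l_A + 1, 1.0 / (l_B - l_A + 1))  # uniform arrival probabilities

N = l_B + k  # enough samples to cover the latest possible arrival
y = np.sqrt(sigma2 / 2) * (rng.standard_normal(N) + 1j * rng.standard_normal(N))
y[l_true:l_true + k] += alpha * np.exp(1j * 1.3) * u1

U1 = decision_variable(y, u1, P, l_A, alpha, sigma2)
U0 = decision_variable(y, u0, P, l_A, alpha, sigma2)
decision = 1 if U1 > U0 else 0
```

Comparing `U1` with `U0` directly corresponds to a zero threshold; any other threshold would be an additional design choice.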

Unknown Time of Arrival and Amplitude—Known Frequency#

In this case, we write the conditional pdf of \( \vec{y} \) given both \( \alpha \) and \( t_l \):

We assume that the amplitude density function is Rician.
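For reference, one common parameterization of the Rician density is \( p(\alpha) = \frac{\alpha}{\sigma^2} \exp\!\left(-\frac{\alpha^2 + s^2}{2\sigma^2}\right) I_0\!\left(\frac{\alpha s}{\sigma^2}\right) \) for \( \alpha \ge 0 \). The symbols \( s \) and \( \sigma \) are assumptions introduced here and may differ from the notation used elsewhere in these notes. The sketch evaluates this density and checks numerically that it integrates to one.

```python
import numpy as np

def rician_pdf(alpha, s, sigma):
    """Rician density with specular component s and scale sigma (assumed notation)."""
    alpha = np.asarray(alpha, dtype=float)
    return (alpha / sigma**2) * np.exp(-(alpha**2 + s**2) / (2.0 * sigma**2)) \
        * np.i0(alpha * s / sigma**2)

# Integrate on a fine grid; the density is negligible beyond the upper limit
grid = np.linspace(0.0, 15.0, 30001)
step = grid[1] - grid[0]
area = float(np.sum(rician_pdf(grid, s=2.0, sigma=1.0)) * step)
```

Setting `s = 0` reduces the expression to the Rayleigh density, since \( I_0(0) = 1 \).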

Averaging the conditional density over \( \alpha \) then produces

where

the random variable \( g_{i,l} \) is

and

It follows that the conditional log-likelihood function is

and an average near-optimal receiver can be found by averaging over the random variable \( t_l \) from \( l = l_A \) to \( l = l_B \).

The result is the following decision variable,

and a functional block diagram is

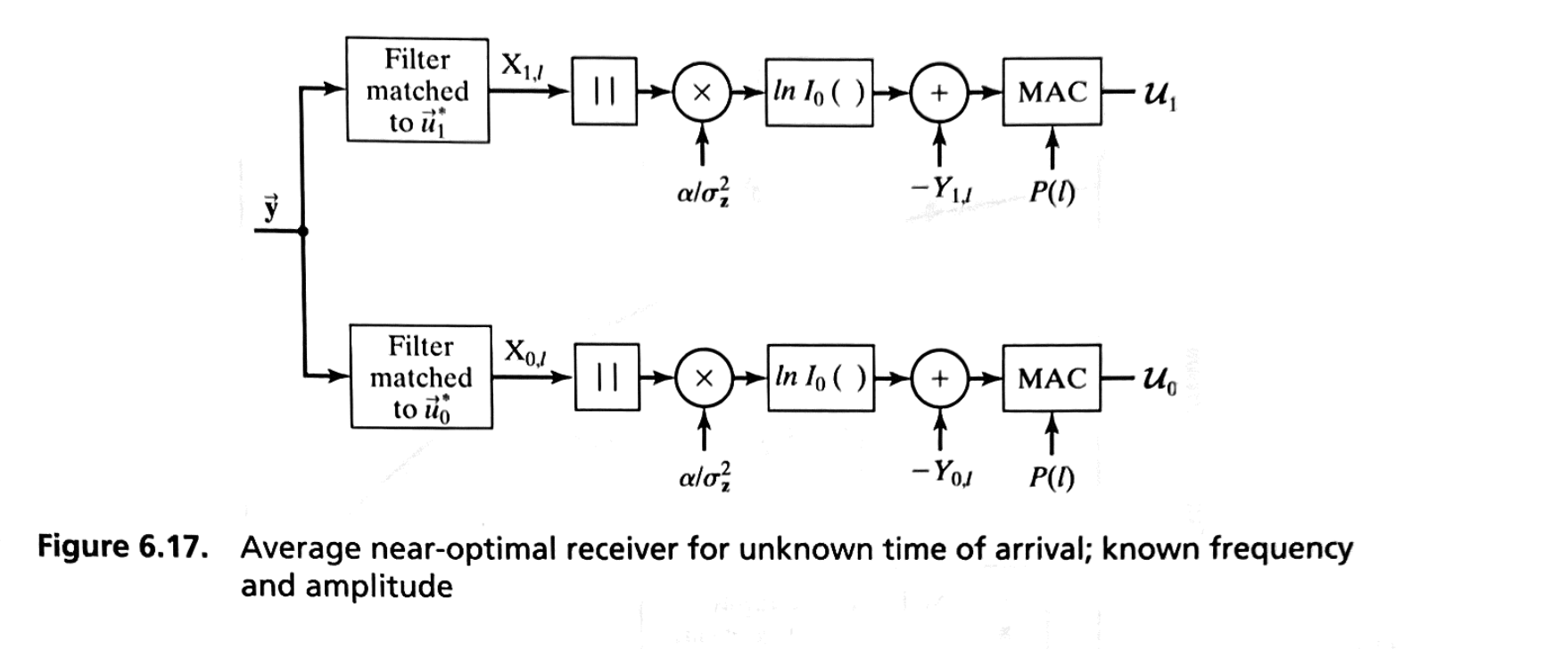

Unknown Time of Arrival, Amplitude, and Frequency#

This section closely follows the development and nomenclature of the Unknown Frequency section.

Using the development of \(p_i(\vec{y} | \alpha, f_d)\), we can write the conditional density function as

which, after averaging over the Rician variable \( \alpha \), is

where \( c_{i,l} \) and \( e \) are defined previously as

and

and the random variable \( \tilde{g}_{i,l} \) is

and

Using approximations for the pdf of \( f_d \), similar to those of Eqs. (6.89) and (6.90), we can average over \( f_d \) to obtain

From this, the conditional likelihood function can be written as

and an average near-optimal receiver can be determined by averaging over \( t_l \), where

and \( v_{jm} = e^{j 2\pi f_m t_j} \).

This receiver has two decision variables, \( U_1 \) and \( U_0 \), and is shown functionally in Figure 6.20.
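The factors \( v_{jm} \) can be read as a bank of frequency-shifted references, one per trial frequency \( f_m \). The sketch below builds \( v_{jm} = e^{j 2\pi f_m t_j} \) on a grid and correlates the received samples against each shifted reference. How the \( v_{jm} \) enter the receiver's equations is not reproduced here, so the combination \( u_j v_{jm} \), the function name `doppler_bank`, the sample times, and the frequency grid are assumptions for illustration only.

```python
import numpy as np

def doppler_bank(y, u, t, f_grid):
    """Correlate y with the reference u shifted to each trial frequency f_m."""
    v = np.exp(2j * np.pi * np.outer(t, f_grid))  # v[j, m] = exp(j 2 pi f_m t_j)
    shifted = u[:, None] * v                      # one shifted reference per column
    return np.sum(y[:, None] * np.conj(shifted), axis=0)

fs, k = 64.0, 64
t = np.arange(k) / fs                             # sample times t_j
f_grid = np.array([-4.0, -2.0, 0.0, 2.0, 4.0])    # trial frequency shifts f_m (Hz)
u = np.exp(2j * np.pi * 8.0 * t)                  # hypothetical reference waveform
# Noise-free received samples with a 2 Hz shift, unknown phase, amplitude 0.5
y = 0.5 * np.exp(1j * 0.4) * u * np.exp(2j * np.pi * 2.0 * t)

X = doppler_bank(y, u, t, f_grid)
best = int(np.argmax(np.abs(X)))                  # index of the best-matching f_m
```

In the receiver described above these per-frequency outputs would be averaged rather than maximized; the `argmax` here only shows that the bank responds most strongly at the true shift.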